Optionally, you can verify your installation via: $ git clone īefore understanding what’s new in PyTorch 2.0, let us first understand the fundamental difference between eager and graph executions ( Figure 1).įigure 1: Eager vs. $ docker run -gpus all -it ghcr.io/pytorch/pytorch-nightly:latest /bin/bashīe sure to specify -gpus all so that your container can access all your GPUs. $ docker pull ghcr.io/pytorch/pytorch-nightly However, if you don’t have CUDA 11.6 or 11.7 installed on your system, you can download all the required dependencies in the PyTorch nightly binaries with docker.

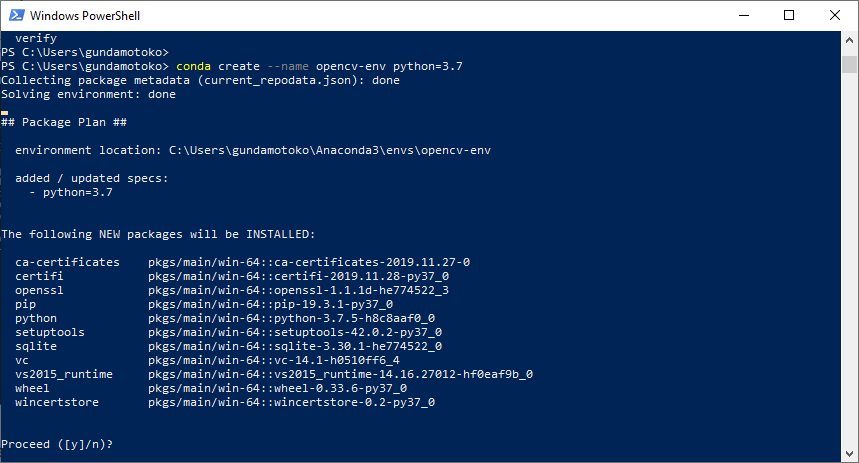

Here’s how you can install the PyTorch 2.0 nightly version via pip:įor CUDA version 11.7 $ pip3 install numpy -pre torch -force-reinstall -extra-index-url įor CUDA version 11.6 $ pip3 install numpy -pre torch -force-reinstall -extra-index-url However, to successfully install PyTorch 2.0, your system should have installed the latest CUDA (Compute Unified Device Architecture) versions (11.6 and 11.7). Like previous versions, PyTorch 2.0 is available as a Python pip package. We start this lesson by learning to install PyTorch 2.0. Looking for the source code to this post? Jump Right To The Downloads Section To learn what’s new in PyTorch 2.0, just keep reading. What’s Behind PyTorch 2.0? TorchDynamo and TorchInductor.

What’s New in PyTorch 2.0? pile (today’s tutorial).This lesson is the 1st of a 2-part series on Accelerating Deep Learning Models with PyTorch 2.0: In this series, you will learn about Accelerating Deep Learning Models with PyTorch 2.0. This blog series aims to understand and test the capabilities of PyTorch 2.0 via its beta release. The stable release of PyTorch 2.0 is planned for March 2023. On December 2, 2022, the team announced the launch of PyTorch 2.0, a next-generation release that will make training deep neural networks much faster and support dynamic shapes. Thus, to leverage these resources and deliver high-performance eager execution, the team moved substantial parts of PyTorch internals to C++. However, over all these years, hardware accelerators like GPUs have become 15x and 2x faster in compute and memory access, respectively. With continuous innovation from the PyTorch team, PyTorch has moved from version 1.0 to the most recent version, 1.13. It has provided some of the best abstractions for distributed training, data loading, and automatic differentiation. Since the launch of PyTorch in 2017, it has strived for high performance and eager execution. The success of PyTorch is attributed to its simplicity, first-class Python integration, and imperative style of programming. Over the last few years, PyTorch has evolved as a popular and widely used framework for training deep neural networks (DNNs). Evaluating Convolutional Neural Networks.Parsing Command Line Arguments and Running a Model.
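To make the eager vs. graph distinction concrete, here is a minimal, framework-free Python sketch. It is a toy illustration under our own assumptions, not how PyTorch implements either mode, and the names `eager_forward`, `Graph`, and `build_graph` are hypothetical. In eager mode, each operation runs the moment it is called. In graph mode, operations are first recorded into a graph and only executed later. Because the whole program is visible before anything runs, a compiler can rewrite it, here by fusing all nodes into one. In PyTorch 2.0 itself, wrapping a model with `torch.compile` is the entry point to this kind of graph capture and compilation.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


def eager_forward(x: float) -> Tuple[float, List[str]]:
    """Eager execution: every operation runs immediately and yields a concrete value."""
    log: List[str] = []
    y = x * 2.0            # computed right away
    log.append(f"mul -> {y}")
    z = y + 3.0            # computed right away
    log.append(f"add -> {z}")
    return z, log


@dataclass
class Graph:
    """Hypothetical toy graph: an ordered list of (op_name, function) nodes."""
    nodes: List[Tuple[str, Callable[[float], float]]] = field(default_factory=list)

    def add_node(self, name: str, fn: Callable[[float], float]) -> None:
        # Graph mode: record the operation instead of executing it.
        self.nodes.append((name, fn))

    def fuse(self) -> "Graph":
        # The whole program is known up front, so all nodes can be merged into a
        # single node (a stand-in for real optimizations such as kernel fusion).
        fns = [fn for _, fn in self.nodes]

        def fused(v: float) -> float:
            for fn in fns:
                v = fn(v)
            return v

        names = "+".join(name for name, _ in self.nodes)
        return Graph([(f"fused({names})", fused)])

    def run(self, x: float) -> float:
        # Execution happens only here, after the graph has been built.
        for _, fn in self.nodes:
            x = fn(x)
        return x


def build_graph() -> Graph:
    g = Graph()
    g.add_node("mul", lambda v: v * 2.0)  # recorded, not computed
    g.add_node("add", lambda v: v + 3.0)  # recorded, not computed
    return g


if __name__ == "__main__":
    value, trace = eager_forward(5.0)
    print(value, trace)                        # 13.0 ['mul -> 10.0', 'add -> 13.0']

    graph = build_graph()                      # nothing has been computed yet
    print([name for name, _ in graph.nodes])   # ['mul', 'add']
    fused = graph.fuse()
    print([name for name, _ in fused.nodes])   # ['fused(mul+add)']
    print(fused.run(5.0))                      # 13.0
```

Both paths produce the same result. The difference is when the work happens: eager mode is easy to debug line by line, while graph mode trades that immediacy for a chance to optimize the program as a whole before running it.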

